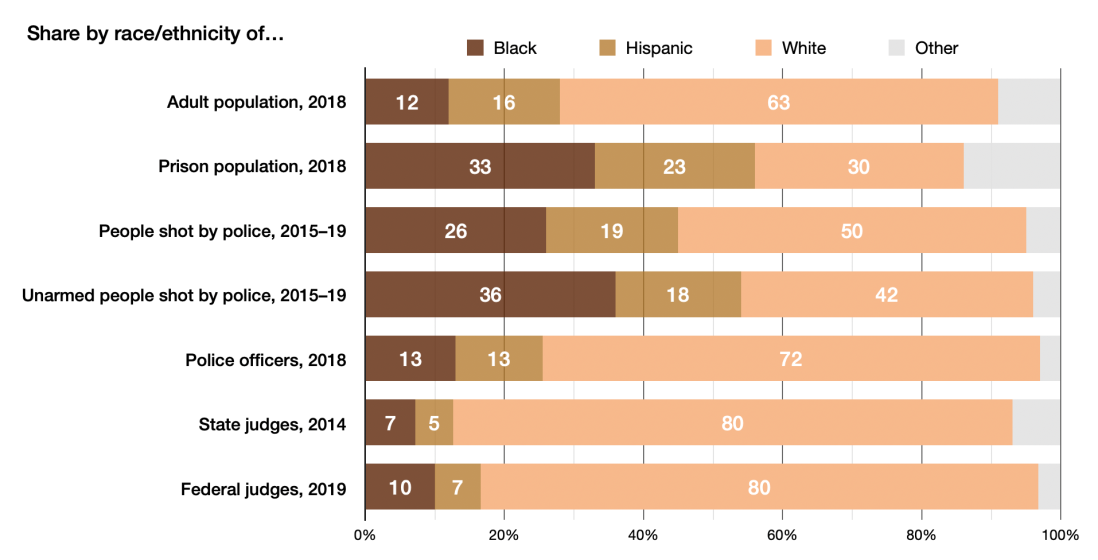

Data models are foundational to information processing, and in the digital world they stand in for the real world. When machines are used to make algorithmic decisions, those algorithms are informed by the data models they use. The data structured by these models is necessarily numerical, since machines perform logical operations rather than creative interpretations. It follows that the data used in machine operations are machine-language translations of real-world phenomena, expressed in a data model designed for efficient processing. It should not be surprising, then, that as information systems increasingly make decisions that affect people and communities, their operations are in a very direct sense an extension of the messy human world. The result has been information systems that reflect human racism, sexism, and many other -isms, causing real-world harm to individuals and communities. But given the black-box nature of “machine learning” algorithms, how do we know what happens inside the black box? How can we document machine bias so as to design algorithms that don’t perpetuate social harms?

Category: Information Literacy

The “Privacy Paradox” and Our Expectations of Online Privacy

The analysis presented here is based on my review of existing research on the privacy expectations of people who create online content. It concerns the full range of user interactions on what we used to call Web 2.0 platforms, focusing on social media and user-content platforms such as Facebook, Twitter, Reddit, Instagram, and Amazon. User interactions include posting original content (text, photos, videos, memes, etc.) and commenting on content posted by others. Reviews on Amazon and comments on news websites count as online content in this analysis, as do photos uploaded to photo-sharing sites and original videos posted to YouTube. Anything in any format created by an individual from their own original thought and creative energy, and subsequently posted by that individual on a social media platform, counts as online content. In most instances, the online content or interaction contains or is traceable to personally identifiable information, even if the content creator did not intend this.

The Future of News and How to Stop It

Today we have an abundance of information resources undreamed of in past centuries, yet via the Internet we are exposed to more disinformation than any previous generation. Digital media technologies are being exploited on a massive scale to spread propaganda designed to undermine trust in all forms of information, to provoke strong affective responses, and to entrench political, cultural, and social divisions. The critical demands of the digital age have outpaced the development of a corresponding information literacy. Meanwhile, journalists are branded “enemies of the people” by authoritarian leaders even as they face layoffs from newsrooms no longer supported by a sustainable business model. Short of reinvention, professional journalism will be increasingly endangered and the relevance of news organizations will continue to decline. In this paper I propose a new collaborative model for news production and curation that combines the expertise of librarians, journalists, educators, and technologists, with the objectives of addressing today’s information literacy deficit, bolstering the credibility and verifiability of news, and restoring reasoned deliberation in the public sphere.